
Hi Community,

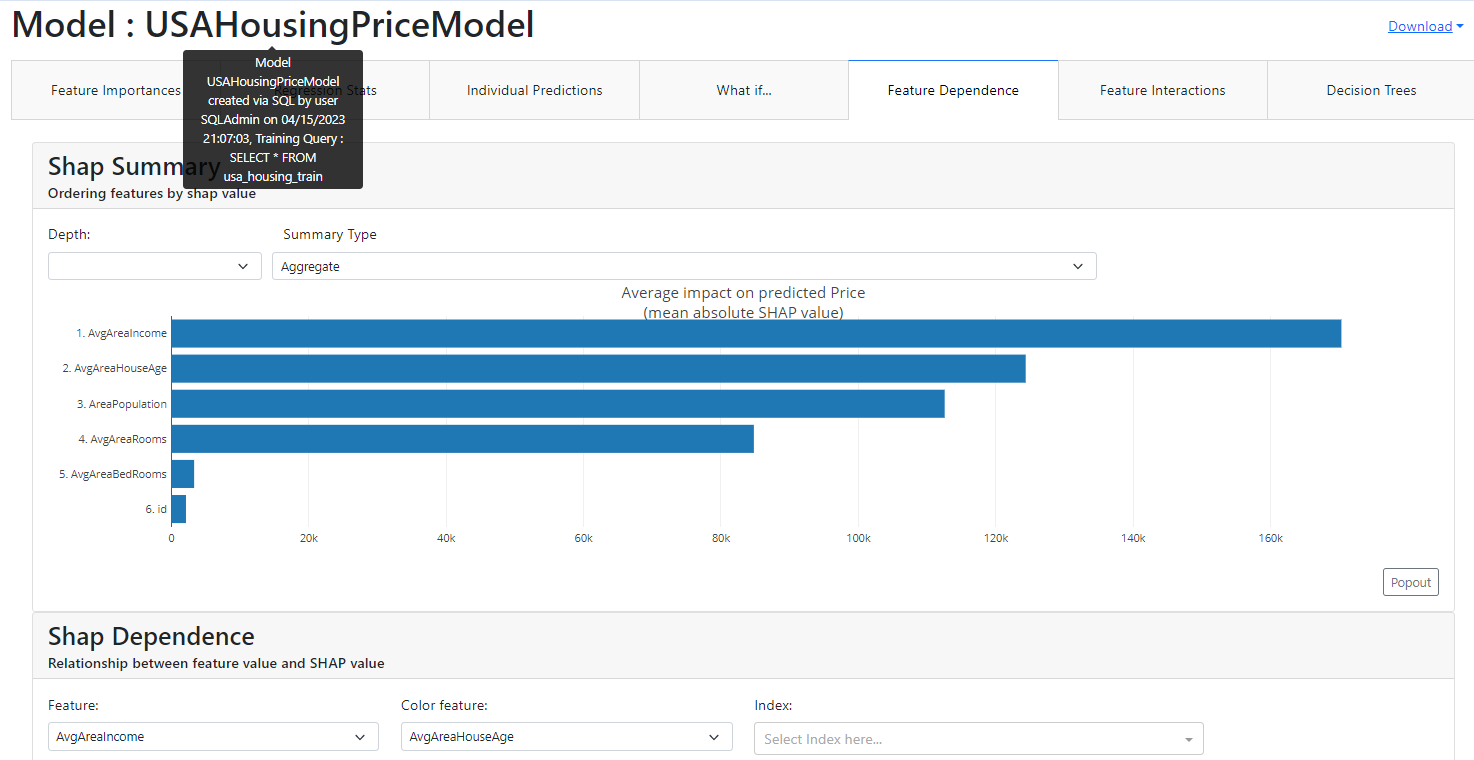

In this article, I will introduce my application, iris-mlm-explainer.

This web application connects to InterSystems Cloud SQL to create, train, and validate ML models and to make predictions. It also displays a dashboard of all the trained models that explains how each fitted machine learning model works. The dashboard provides interactive plots of model performance, feature importances, feature contributions to individual predictions, partial dependence plots, SHAP (interaction) values, visualizations of individual decision trees, and more.

Prerequisites

- You should have an account with InterSystems Cloud SQL

- You should have Git installed locally.

- You should have Python3 installed locally.

Getting Started

We will follow the steps below to create a model and view its explainer dashboard:

- Step 1 : Clone/git pull the repo

- Step 2 : Log in to InterSystems Cloud SQL Service Portal

- Step 2.1 : Add and Manage Files

- Step 2.2 : Import DDL and data files

- Step 2.3 : Create Model

- Step 2.4 : Train Model

- Step 2.5 : Validate Model

- Step 3 : Activate Python virtual environment

- Step 3.1 : Set InterSystems Cloud SQL connection parameters

- Step 4 : Run Web Application for prediction

- Step 5 : Explore the Explainer dashboard

Step 1 : Clone/git pull the repo

So let us start with the first step.

Create a folder and clone (or git pull) the repo into any local directory.

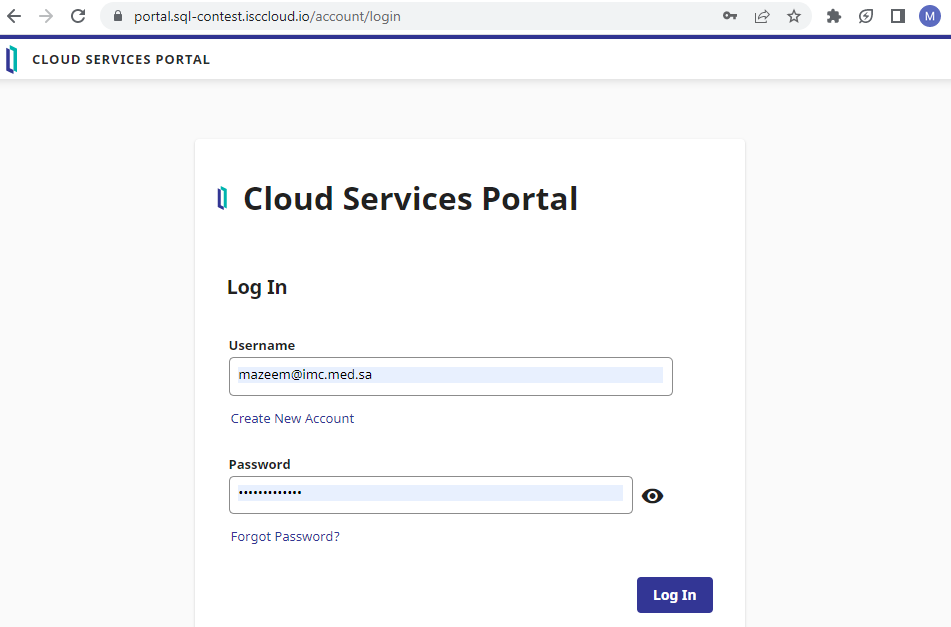

git clone https://github.com/mwaseem75/iris-mlm-explainer.git

Step 2 : Log in to InterSystems Cloud SQL Service Portal

Log in to InterSystems Cloud Service Portal

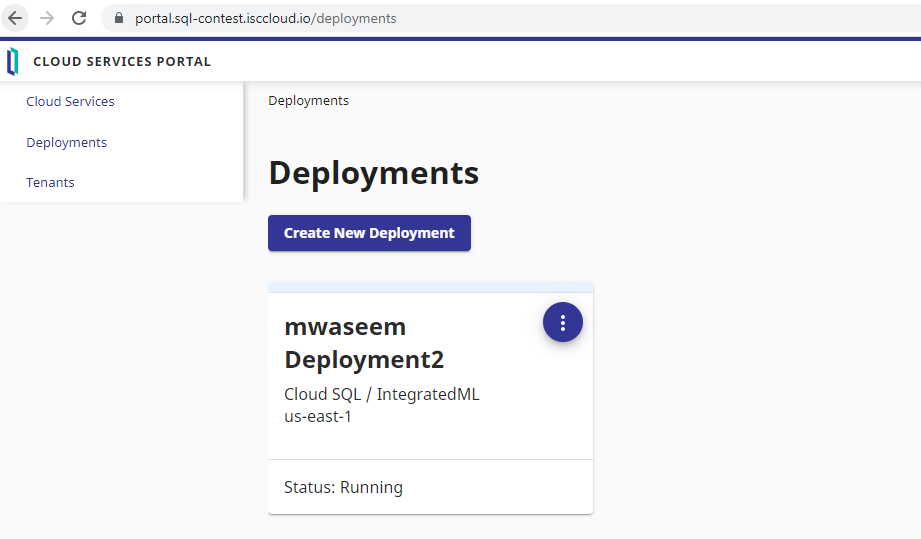

Select the running deployment

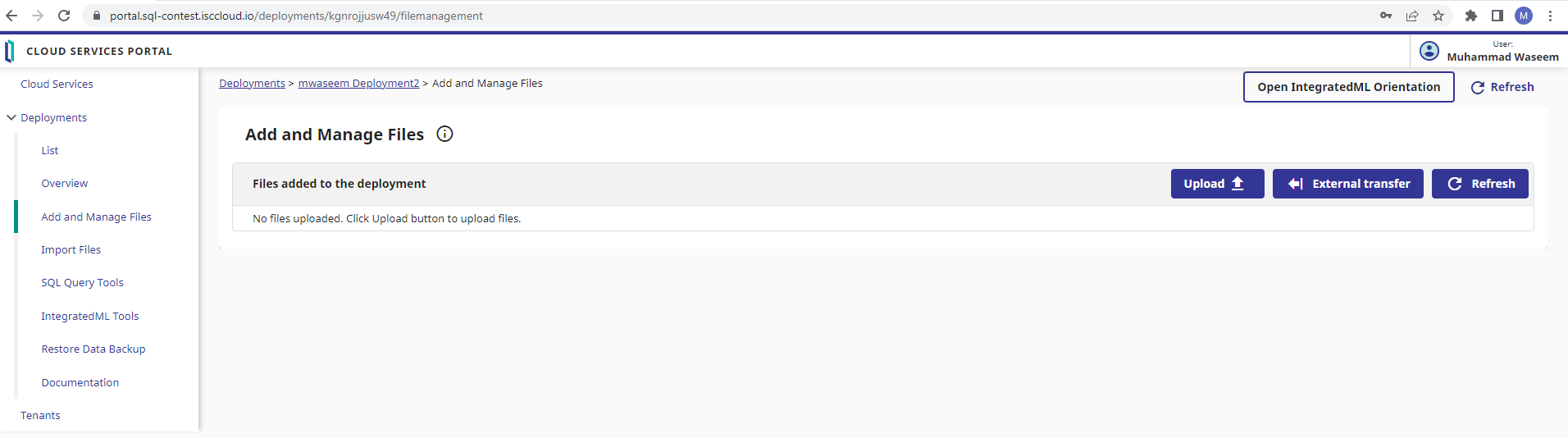

Step 2.1 : Add and Manage Files

Click on Add and Manage Files

The repo's datasets folder contains USA_Housing_tables_DDL.sql (DDL to create the tables), USA_Housing_train.csv (training data), and USA_Housing_validate.csv (validation data). Click the upload button to add these files.

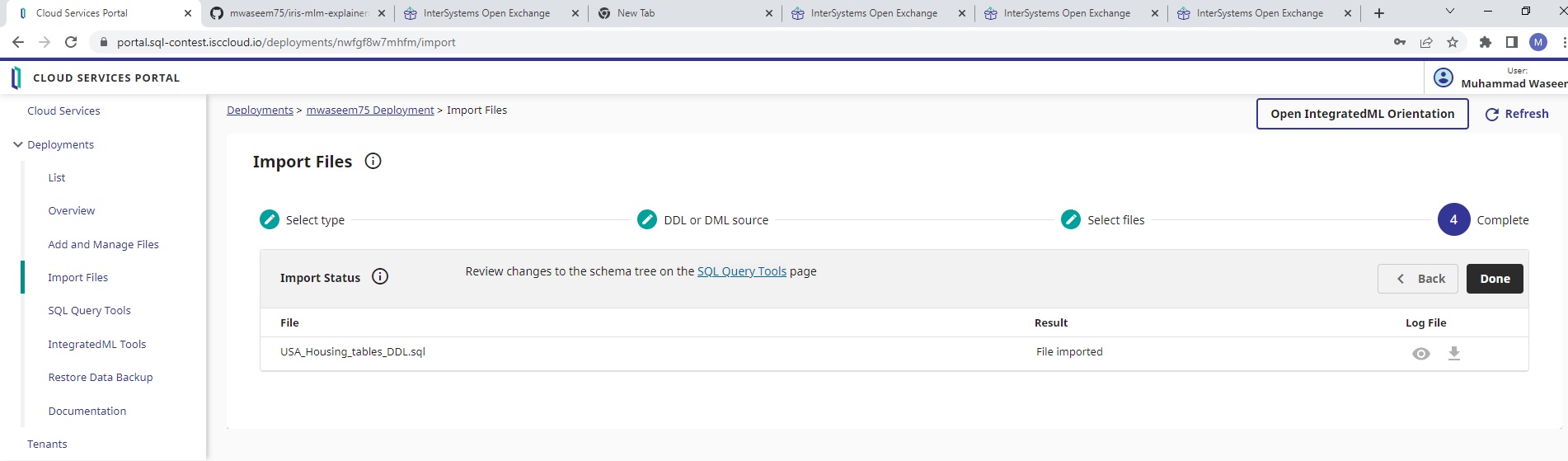
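Before uploading, you may want a quick local sanity check of the CSV files. The sketch below uses only Python's standard csv module. It assumes you run it from the root of the cloned repo (so the files are under datasets/) and that the first row of each CSV is a header, and it compares the data-row counts with the 4000 and 1500 rows that the import step expects.

```python
import csv
from pathlib import Path

# Data-row counts (header excluded) that the article's import step expects.
EXPECTED_ROWS = {
    "USA_Housing_train.csv": 4000,
    "USA_Housing_validate.csv": 1500,
}


def csv_summary(path):
    """Return (header, number_of_data_rows) for a CSV whose first row is a header."""
    # utf-8-sig also strips a byte-order mark if the file has one.
    with open(path, newline="", encoding="utf-8-sig") as f:
        reader = csv.reader(f)
        header = next(reader, [])
        rows = sum(1 for row in reader if row)  # ignore blank lines
    return header, rows


if __name__ == "__main__":
    datasets = Path("datasets")  # assumed: run from the root of the cloned repo
    for name, expected in EXPECTED_ROWS.items():
        path = datasets / name
        if not path.exists():
            print(f"{name}: not found under {datasets}/")
            continue
        header, rows = csv_summary(path)
        verdict = "OK" if rows == expected else "check this file"
        print(f"{name}: {rows} data rows (expected {expected}), {verdict}")
        print(f"  columns: {header}")
```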

Step 2.2 : Import DDL and data files

Click on Import files, select the DDL or DML statement(s) radio button, and then click the Next button

Select the InterSystems IRIS radio button and then click the Next button

Select the USA_Housing_tables_DDL.sql file and then click the Import files button

Click Import in the confirmation dialog to create the tables

Click SQL Query Tools to verify that the tables were created

Import data files

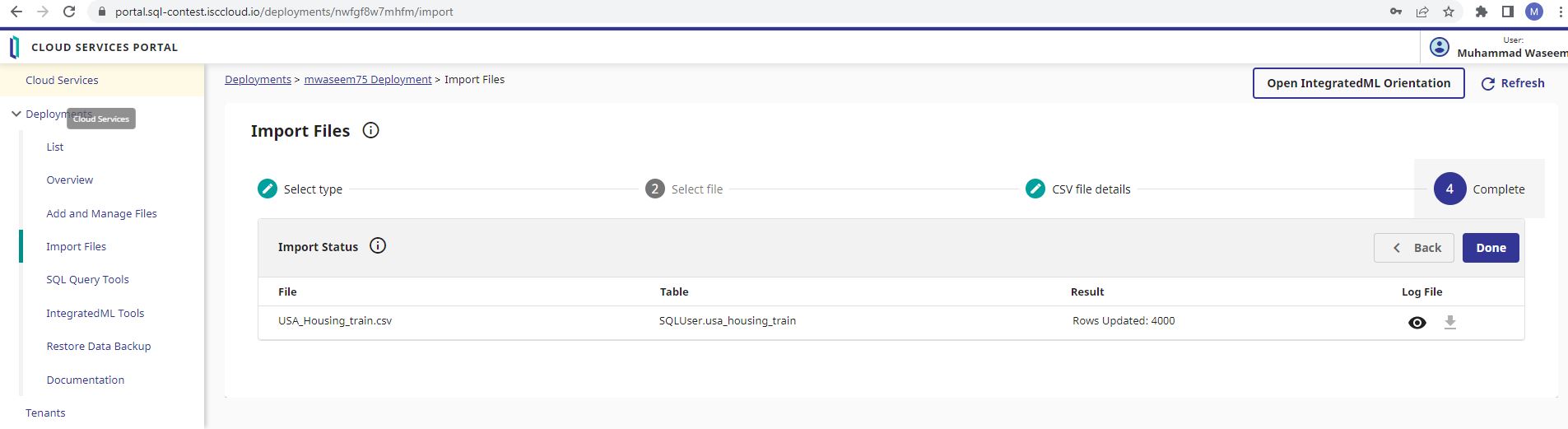

Click on Import files, then click on CSV data radio button, then click the next button

Select USA_Housing_train.csv file and click the next button

Select USA_Housing_train.csv file from the dropdown list, check import rows as a header row and Field names in header row match column names in selected table and click import files

click on import in the confirmation dialog

Make sure 4000 rows are updated

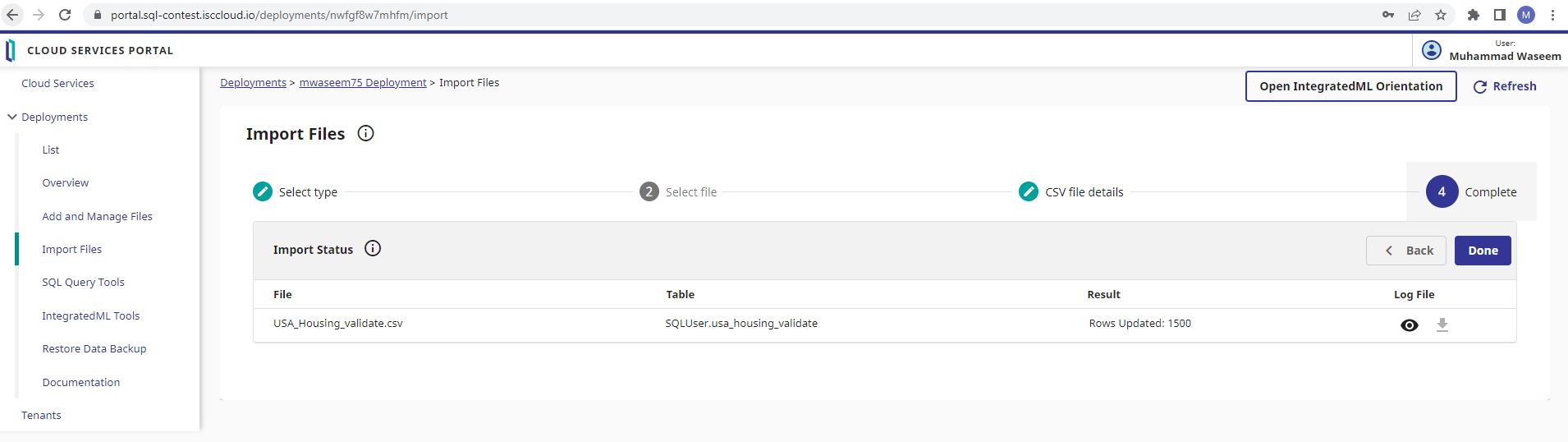

Do the same steps to import USA_Housing_validate.csv file which contains 1500 records
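If you prefer to double-check the import from Python instead of SQL Query Tools, the helper below counts rows through any DB-API 2.0 connection. It assumes you already have a connection open to your Cloud SQL deployment (how you open it depends on the driver you use) and that the tables are named usa_housing_train and usa_housing_validate, as in the panels used in the next steps. Because table names cannot be bound as query parameters, the helper validates the name before putting it into the SQL text.

```python
import re

# Plain identifiers, optionally schema-qualified (e.g. SQLUser.usa_housing_train).
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?$")


def count_rows(conn, table):
    """Return SELECT COUNT(*) for `table` using any DB-API 2.0 connection."""
    # Table names can't be passed as parameters, so reject anything unusual
    # before splicing the name into the SQL text.
    if not _IDENTIFIER.match(table):
        raise ValueError(f"unsafe table name: {table!r}")
    cur = conn.cursor()
    try:
        cur.execute(f"SELECT COUNT(*) FROM {table}")
        return cur.fetchone()[0]
    finally:
        cur.close()
```

For example, count_rows(conn, "usa_housing_train") should return 4000 after a successful import, and count_rows(conn, "usa_housing_validate") should return 1500.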

Step 2.3 : Create Model

Click IntegratedML Tools and select the Create panel.

Enter USAHousingPriceModel in the Model Name field, select the usa_housing_train table, and select Price in the Field to predict dropdown. Click the Create model button to create the model
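As far as I understand it, the Create panel is a front end for IntegratedML's CREATE MODEL statement, which defines a model (its label column and source table) without training it. Here is a minimal sketch that builds the equivalent statement; running it through a DB-API cursor is shown only as a comment and assumes an open connection named conn.

```python
def create_model_sql(model: str, label: str, source: str) -> str:
    """IntegratedML statement that defines (but does not train) a model."""
    # Names are fixed values from this walkthrough, not user input,
    # so plain string formatting is acceptable here.
    return f"CREATE MODEL {model} PREDICTING ({label}) FROM {source}"


sql = create_model_sql("USAHousingPriceModel", "Price", "usa_housing_train")
print(sql)
# To run it (assuming an open DB-API connection `conn`):
#   conn.cursor().execute(sql)
```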

Step 2.4 : Train Model

Select the Train panel, select USAHousingPriceModel from the Model to train dropdown list, and enter USAHousingPriceModel_t1 in the Train model name field

The model is trained once the Run Status shows completed
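Similarly, training corresponds to IntegratedML's TRAIN MODEL statement, where the AS clause gives the trained model its name. The status query is an assumption on my part: I believe IntegratedML records training runs in INFORMATION_SCHEMA.ML_TRAINING_RUNS with a RUN_STATUS column, but please check the view and column names against the IntegratedML documentation for your version.

```python
def train_model_sql(model: str, trained_name: str) -> str:
    """IntegratedML statement that trains `model` and names the trained result."""
    # Names are fixed values from this walkthrough, not user input.
    return f"TRAIN MODEL {model} AS {trained_name}"


# Assumed view and column names; verify against the IntegratedML docs.
TRAINING_STATUS_SQL = (
    "SELECT TRAINING_RUN_NAME, RUN_STATUS "
    "FROM INFORMATION_SCHEMA.ML_TRAINING_RUNS WHERE MODEL_NAME = ?"
)

sql = train_model_sql("USAHousingPriceModel", "USAHousingPriceModel_t1")
print(sql)
# With an open DB-API connection `conn` (assumed):
#   cur = conn.cursor()
#   cur.execute(sql)
#   cur.execute(TRAINING_STATUS_SQL, ["USAHousingPriceModel"])
#   print(cur.fetchall())
```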

Step 2.5 : Validate Model

Select the Validate panel, select USAHousingPriceModel_t1 from the Trained model to validate dropdown list, select usa_housing_validate from the Table to validate model from dropdown list, and click the Validate model button

Click on show validation metrics to view metrics

Click the chart icon to view the Prediction vs. Actual graph

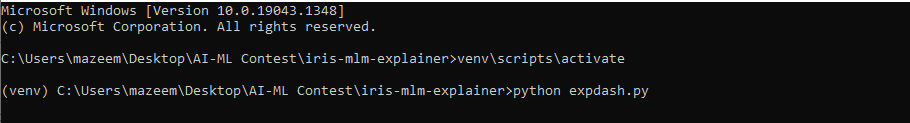
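Validation can also be expressed in SQL. This sketch assumes the IntegratedML forms VALIDATE MODEL ... USE ... FROM ..., metrics read from INFORMATION_SCHEMA.ML_VALIDATION_METRICS (column names as I recall them from the documentation), and PREDICT(model USE trained_model) to produce the actual vs. predicted pairs behind a chart like the one above. conn is any open DB-API 2.0 connection; this is an illustration, not what the portal necessarily runs internally.

```python
MODEL = "USAHousingPriceModel"
TRAINED = "USAHousingPriceModel_t1"
VALIDATION_TABLE = "usa_housing_validate"

# Score the trained model against the held-out table.
VALIDATE_SQL = f"VALIDATE MODEL {MODEL} USE {TRAINED} FROM {VALIDATION_TABLE}"

# Metrics recorded by validation runs (assumed view/column names).
METRICS_SQL = (
    "SELECT METRIC_NAME, METRIC_VALUE "
    "FROM INFORMATION_SCHEMA.ML_VALIDATION_METRICS WHERE TRAINED_MODEL_NAME = ?"
)

# Observed vs. predicted prices for a Prediction vs. Actual plot.
PRED_VS_ACTUAL_SQL = (
    f"SELECT Price, PREDICT({MODEL} USE {TRAINED}) AS PredictedPrice "
    f"FROM {VALIDATION_TABLE}"
)


def run_validation(conn):
    """Run a validation and return the metric rows, using a DB-API 2.0 connection."""
    cur = conn.cursor()
    try:
        cur.execute(VALIDATE_SQL)
        cur.execute(METRICS_SQL, [TRAINED])
        return cur.fetchall()
    finally:
        cur.close()
```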

Step 3 : Activate Python virtual environment

The repository already contains a Python virtual environment folder (venv) with all the required libraries.

All we need to do is activate the environment

On Unix or macOS:

source venv/bin/activate

On Windows:

venv\Scripts\activate

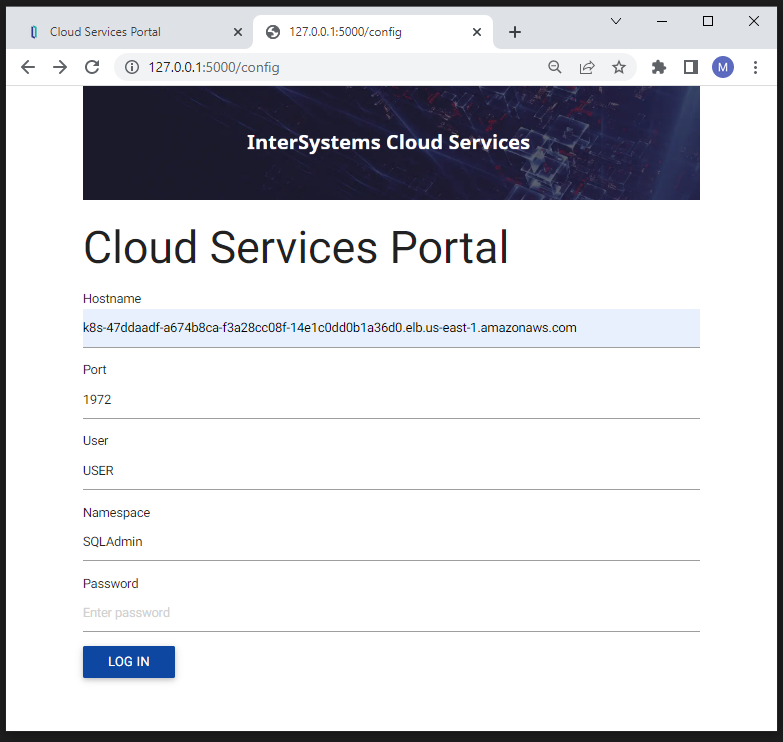
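To confirm that the environment is really active before starting the applications, you can check from Python: inside a virtual environment created with the standard venv module, sys.prefix points to the environment, while sys.base_prefix still points to the base installation.

```python
import sys


def in_virtualenv() -> bool:
    """True when the running interpreter belongs to a virtual environment."""
    # In a venv, sys.prefix is the environment dir; sys.base_prefix is the base install.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("virtual environment active:", in_virtualenv())
print("interpreter:", sys.executable)
```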

Step 3.1 : Set InterSystems Cloud SQL connection parameters

The repo contains a config.py file. Open it and set the connection parameters

Use the same values as in your InterSystems Cloud SQL deployment
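I haven't reproduced the repo's config.py here, so the sketch below only illustrates the kind of parameters a Cloud SQL connection needs (host, port, namespace, user name, password). The field names, environment-variable names, and placeholder defaults are all hypothetical. Reading the values from environment variables is one way to keep the password out of Git.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class CloudSQLConfig:
    # Hypothetical field names; match them to whatever the repo's config.py uses.
    hostname: str
    port: int
    namespace: str
    username: str
    password: str


def load_config() -> CloudSQLConfig:
    """Read connection settings from (hypothetical) environment variables."""
    # Defaults are placeholders only; use your deployment's real values.
    return CloudSQLConfig(
        hostname=os.environ.get("IRIS_HOSTNAME", "localhost"),
        port=int(os.environ.get("IRIS_PORT", "1972")),
        namespace=os.environ.get("IRIS_NAMESPACE", "USER"),
        username=os.environ.get("IRIS_USERNAME", "your-username"),
        password=os.environ.get("IRIS_PASSWORD", ""),
    )
```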

Step 4 : Run Web Application for prediction

Run the following command in the virtual environment to start the main application

python app.py

Navigate to http://127.0.0.1:5000/ to open the application

Enter the age of the house, number of rooms, number of bedrooms, and area population to get the predicted price

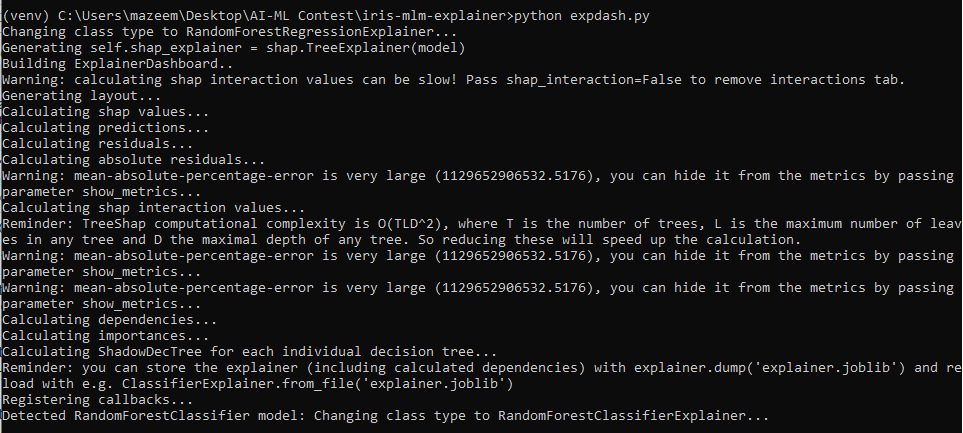
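For readers curious how a single set of form values could be scored, here is one possible approach, not necessarily what app.py does: build a one-row derived table from bound parameters and apply PREDICT() to it. The column names in FEATURES are hypothetical placeholders for those defined in USA_Housing_tables_DDL.sql, and the sketch assumes the model's feature columns are exactly these four.

```python
# Hypothetical feature column names: replace them with the columns defined in
# USA_Housing_tables_DDL.sql (Price is the label, so it is not supplied).
FEATURES = ("HouseAge", "NumberOfRooms", "NumberOfBedrooms", "AreaPopulation")


def predict_query(model: str = "USAHousingPriceModel") -> str:
    """Parameterized query that scores one row of user-entered values."""
    # One-row derived table built from bound parameters, then PREDICT() over it.
    columns = ", ".join(f"CAST(? AS DOUBLE) AS {col}" for col in FEATURES)
    return f"SELECT PREDICT({model}) AS PredictedPrice FROM (SELECT {columns}) AS input_row"


def predict_params(form: dict) -> list:
    """Convert submitted form fields to floats, in FEATURES order."""
    return [float(form[col]) for col in FEATURES]
```

With an open DB-API connection conn, you would run cur = conn.cursor(), then cur.execute(predict_query(), predict_params(form)), and read the price with cur.fetchone()[0].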

Step 5 : Explore the Explainer dashboard

Finally, run the following command in the virtual environment to start the explainer dashboard

python expdash.py

Navigate to http://localhost:8050/ to open the dashboard

The application lists all the trained models, including our USAHousingPriceModel. Click the "go to dashboard" link to view the model explainer
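A list like this can be read from IntegratedML's INFORMATION_SCHEMA.ML_TRAINED_MODELS view. I'm assuming, without having checked expdash.py, that the dashboard builds its list in a similar way. The helper works with any DB-API 2.0 connection.

```python
def list_trained_models(conn):
    """Return (model name, trained model name) pairs via a DB-API 2.0 connection."""
    cur = conn.cursor()
    try:
        cur.execute(
            "SELECT MODEL_NAME, TRAINED_MODEL_NAME "
            "FROM INFORMATION_SCHEMA.ML_TRAINED_MODELS ORDER BY MODEL_NAME"
        )
        return cur.fetchall()
    finally:
        cur.close()
```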

Feature Importances: which features had the biggest impact?

Quantitative metrics for model performance: how close is the predicted value to the observed value?

Prediction: how has each feature contributed to the prediction?

Adjust the feature values to change the prediction

SHAP Summary: features ordered by SHAP values

Interactions Summary: features ordered by SHAP interaction values

Decision Trees: individual decision trees inside the Random Forest
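To make the performance metrics concrete, here is a plain-Python computation of three common regression metrics (MAE, RMSE, and R²) from paired observed and predicted prices. The dashboard's own implementation and exact set of metrics may differ; this only illustrates what the numbers mean.

```python
import math


def regression_metrics(actual, predicted):
    """Compute MAE, RMSE and R^2 for paired observed/predicted values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / n            # mean absolute error
    ss_res = sum(e * e for e in errors)              # residual sum of squares
    rmse = math.sqrt(ss_res / n)                     # root mean squared error
    mean = sum(actual) / n
    ss_tot = sum((a - mean) ** 2 for a in actual)    # total sum of squares
    r2 = 1 - ss_res / ss_tot if ss_tot else float("nan")
    return {"mae": mae, "rmse": rmse, "r2": r2}
```

For example, regression_metrics([1, 2, 3, 4], [1, 2, 3, 5]) gives an MAE of 0.25, an RMSE of 0.5, and an R² of 0.8.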

Thanks

I love it <3, for the more you are using the community driver DB-API :)

Thanks @Guillaume Rongier

great article and app,could you pls also publish this on CN DC? Thx!

Thanks @Michael Lei, Do you mean to publish this article on CN DC? if so then I already requested the translation.

Whoahoo! Thanks for sharing it @Muhammad Waseem

Thanks @Luca Ravazzolo